DanceCraft: A Music-Reactive Real-time Dance Improv System

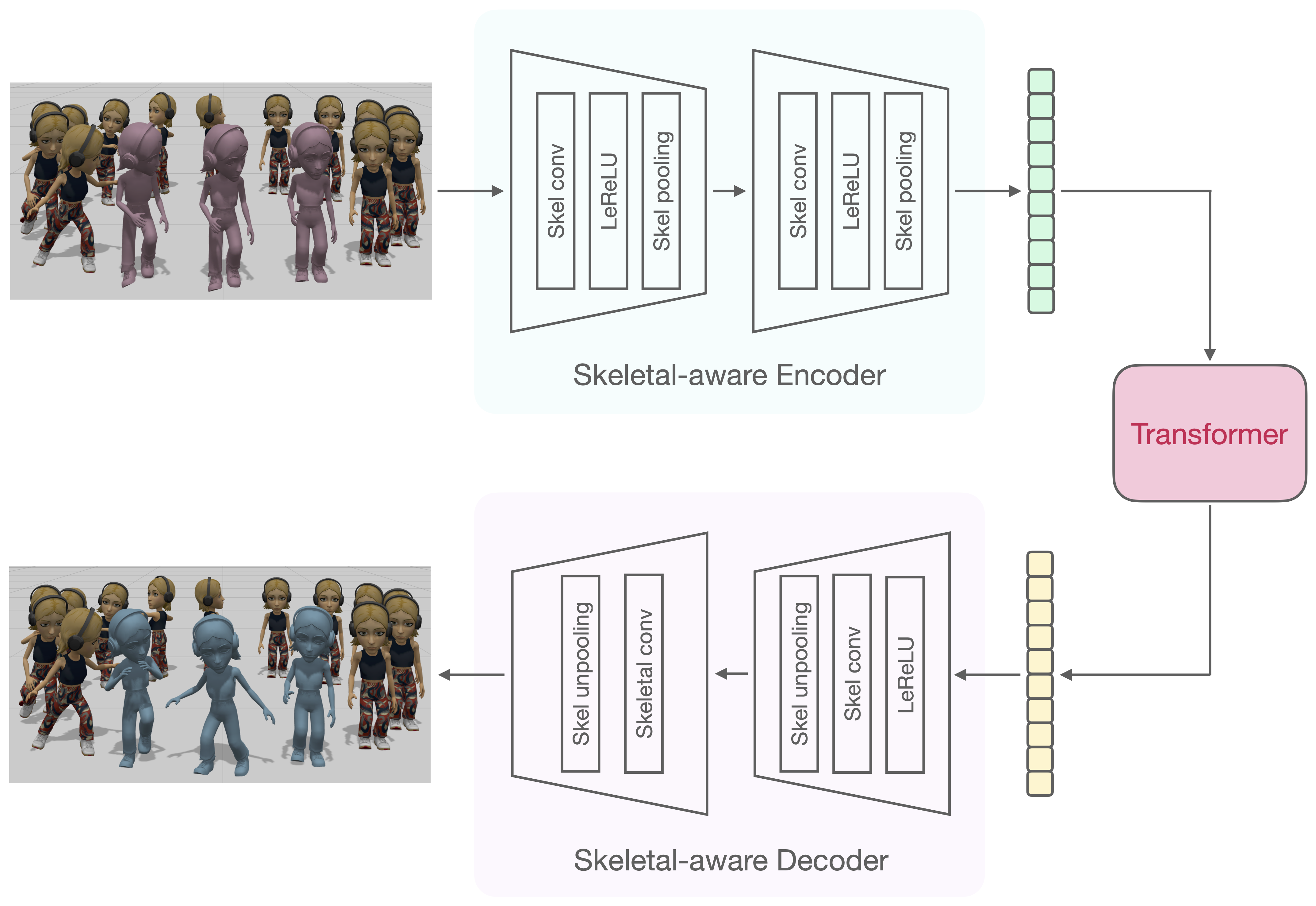

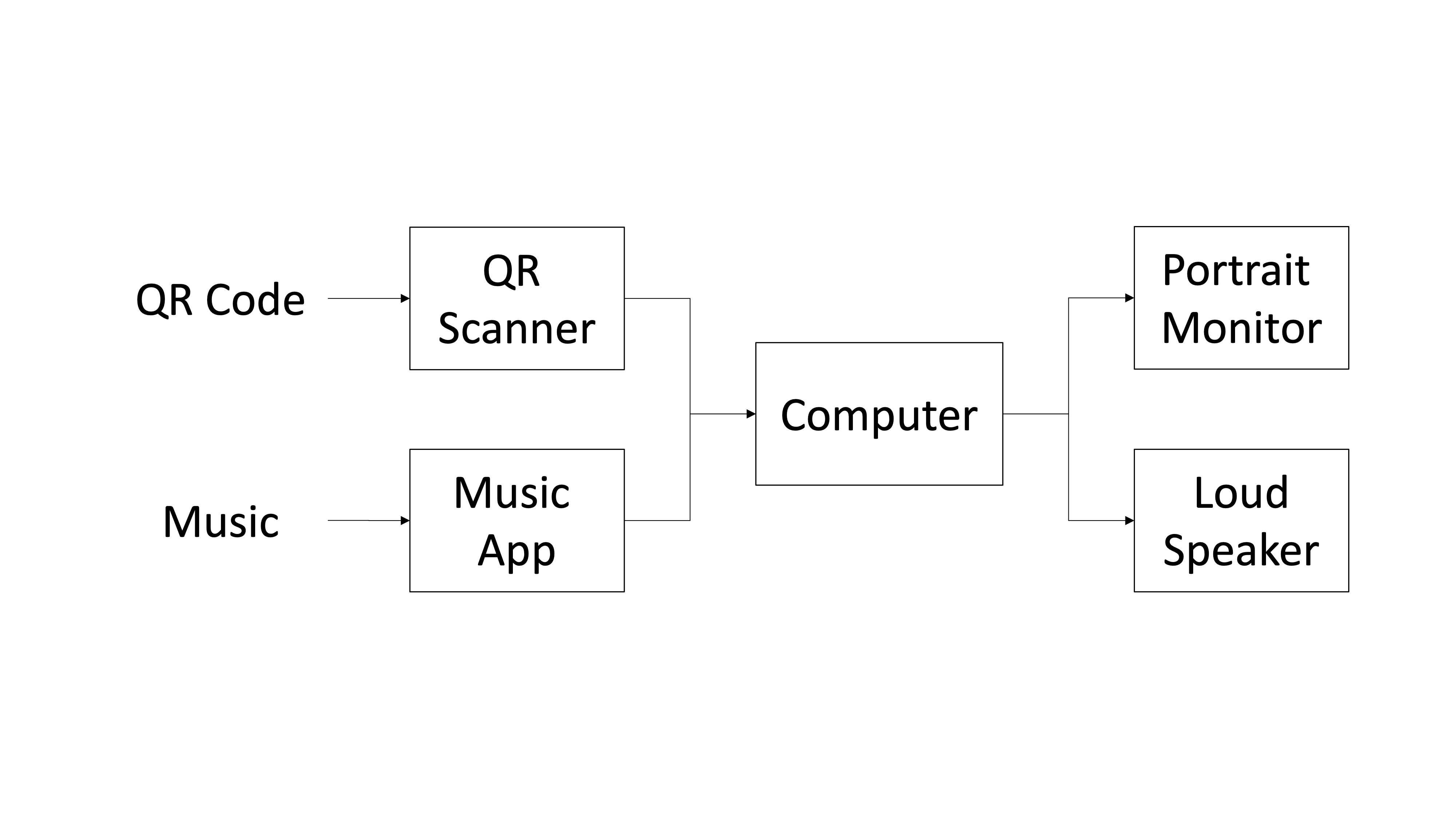

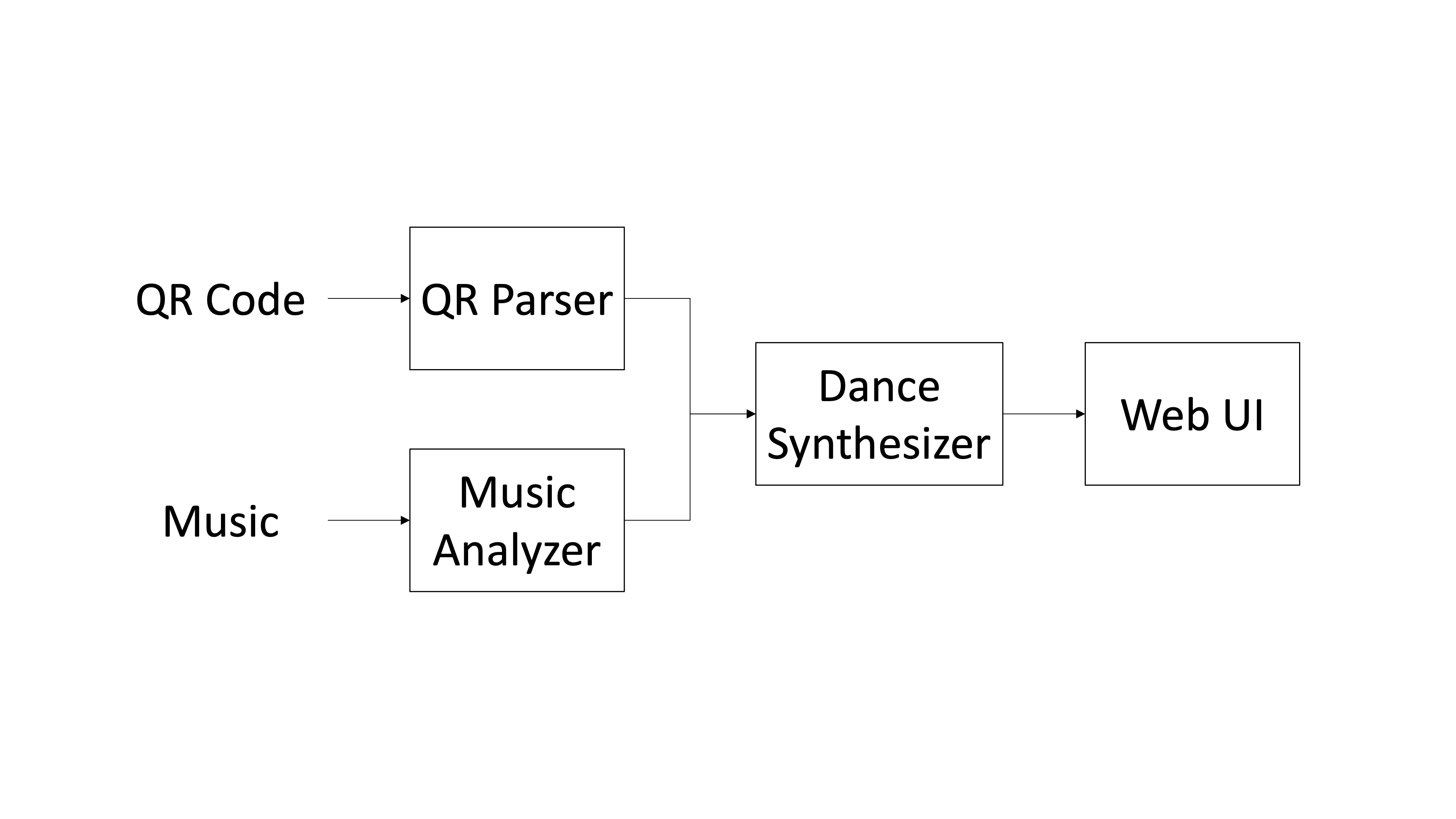

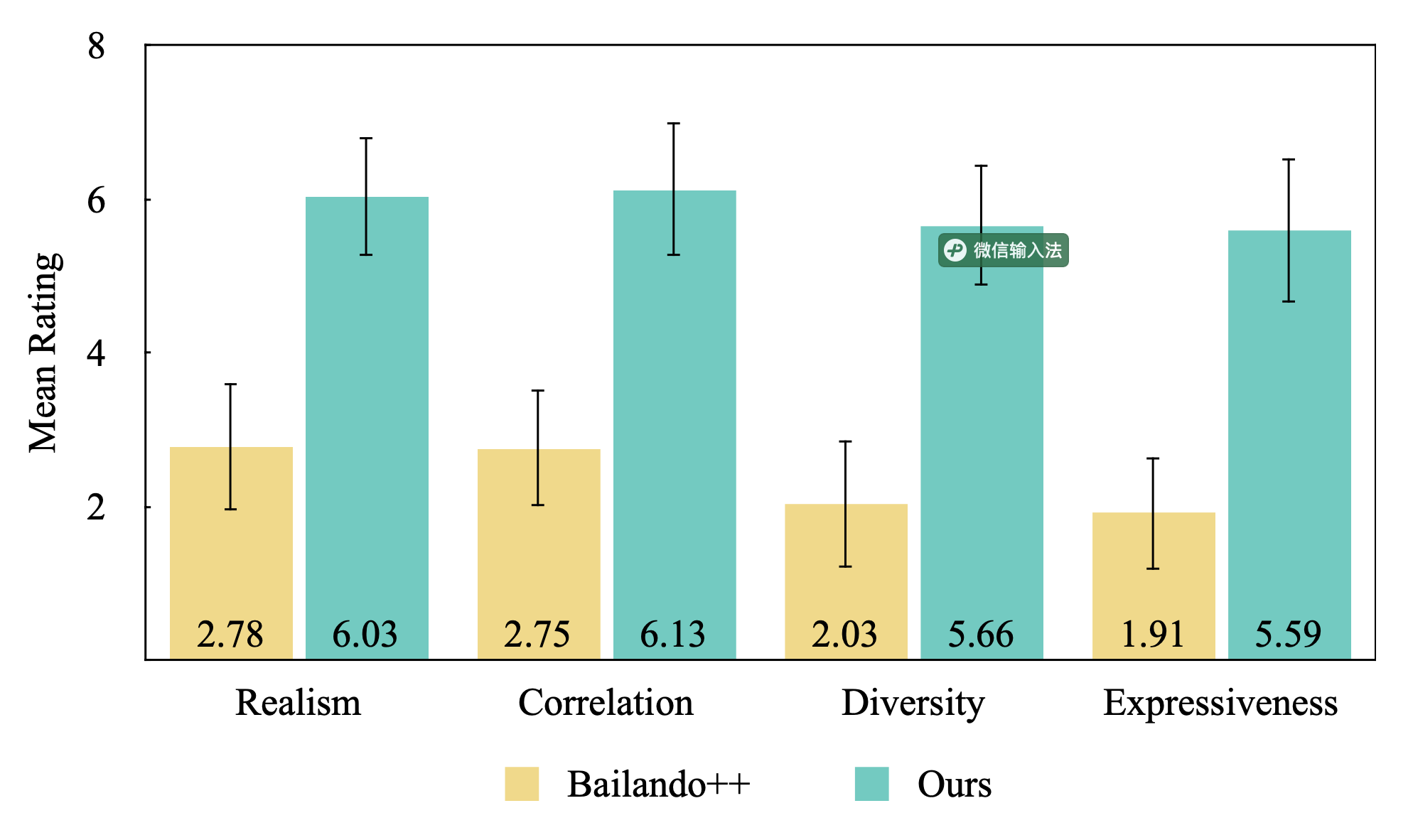

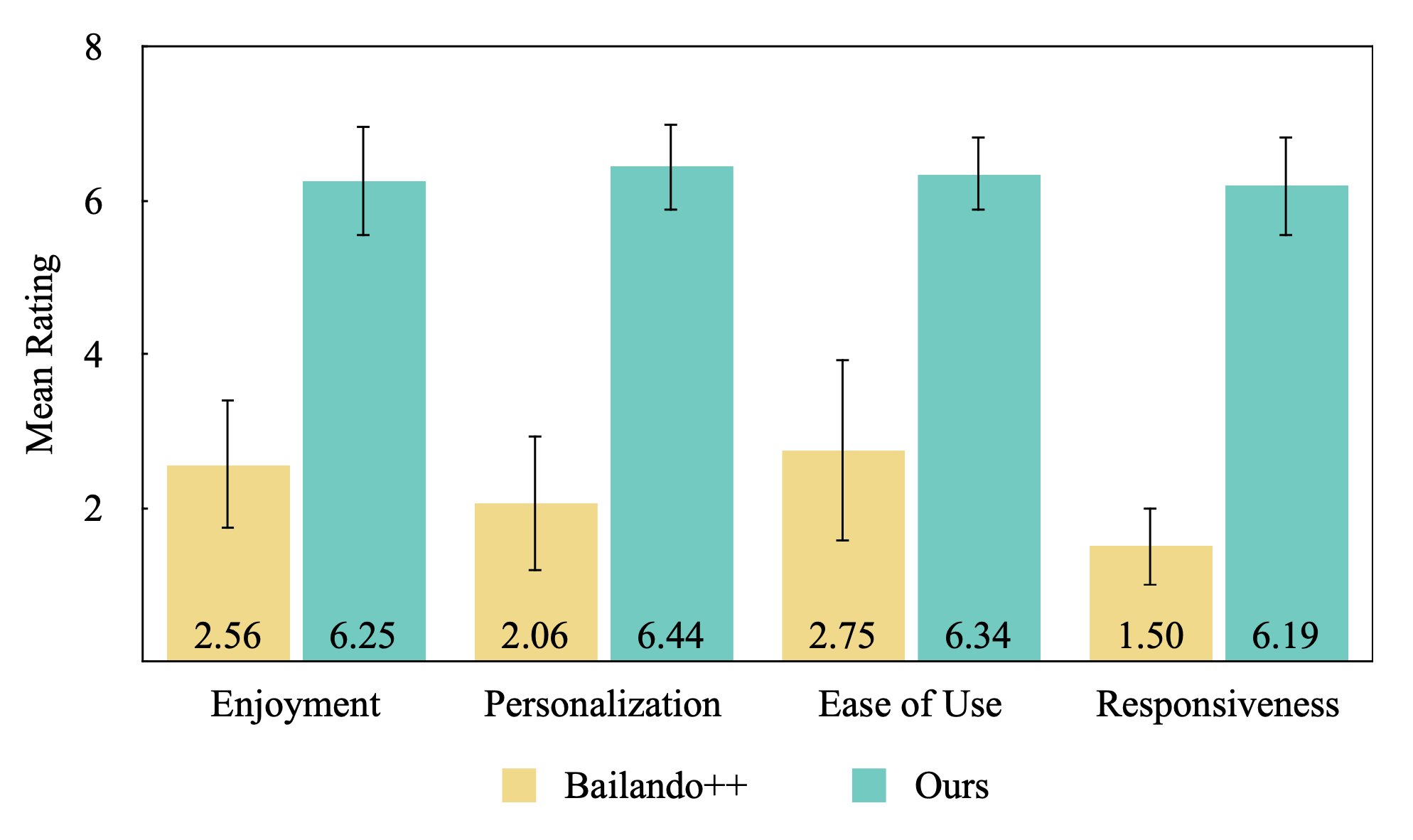

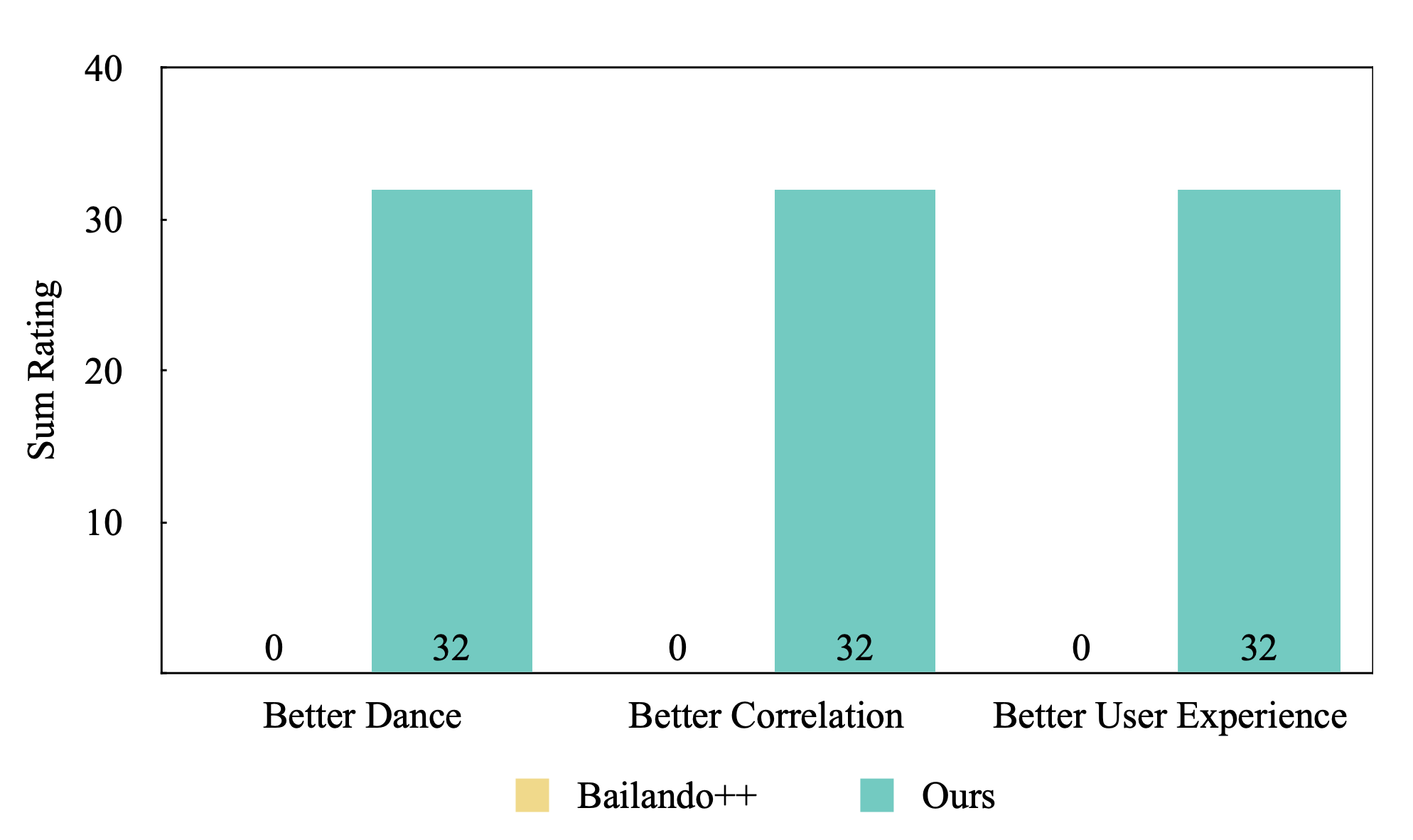

Automatic generation of 3D dance motion in response to live music is a challenging task. Prior research has assumed that either the entire music track, or a significant chunk of it, is available before dance generation begins. In this paper, we present a novel production-ready system that can generate highly realistic dances in reaction to live music. Since predicting future music, or dance choreographed to future music, is a hard problem, we trade off perfect choreography for spontaneous dance-motion improvisation. Given a small slice of the most recently received audio, we first determine whether the audio includes music and, if so, extract high-level descriptors of the music such as tempo and energy. Based on these descriptors, we generate the dance motion. The generated dance is a combination of previously captured dance sequences and randomly triggered generative transitions between different dance sequences. Because of these randomized transitions, two generated dances, even for the same music, tend to look very different. Furthermore, our system offers a high level of interactivity and personalization, allowing users to import their own 3D avatars and have them dance to any music played in the environment. User studies show that users find the system engaging and immersive.
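The abstract describes a per-slice control loop: check whether the latest audio slice contains music, extract tempo and energy, then keep playing a matching captured sequence while occasionally triggering a transition to another one. The Python sketch below illustrates only that control flow and is not the authors' implementation. Its assumptions are our own: an RMS silence gate stands in for the music detector, tempo comes from autocorrelating a simple energy-flux onset envelope, the clip-scoring weights are arbitrary, and the names `extract_descriptors`, `Improviser`, `Clip`, `transition_prob`, and `top_k` are hypothetical. Switching clips here merely marks where a generative transition would be triggered; the transition model itself is not sketched.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Descriptors:
    is_music: bool
    tempo_bpm: float
    energy: float


@dataclass
class Clip:
    name: str          # hypothetical captured dance sequence
    tempo_bpm: float   # tempo the sequence was captured at
    energy: float      # rough intensity tag for the sequence


def extract_descriptors(audio, sr, frame=512, silence_rms=0.01,
                        min_bpm=60.0, max_bpm=180.0):
    """Hypothetical descriptor extractor for one short audio slice."""
    audio = np.asarray(audio, dtype=np.float64)
    energy = float(np.sqrt(np.mean(audio ** 2)))  # RMS energy of the slice
    if energy < silence_rms:
        # Assumption: crude stand-in for a real music/no-music detector.
        return Descriptors(False, 0.0, energy)
    n_frames = len(audio) // frame
    frames = audio[: n_frames * frame].reshape(n_frames, frame)
    frame_rms = np.sqrt(np.mean(frames ** 2, axis=1))
    # Onset envelope: half-wave rectified frame-to-frame energy increase.
    onset = np.maximum(np.diff(frame_rms), 0.0)
    onset -= onset.mean()
    # Autocorrelation for non-negative lags (index == lag in frames).
    ac = np.correlate(onset, onset, mode="full")[len(onset) - 1:]
    fps = sr / frame
    lags = np.arange(len(ac))
    bpm = np.zeros(len(ac))
    bpm[1:] = 60.0 * fps / lags[1:]
    valid = (bpm >= min_bpm) & (bpm <= max_bpm)
    if not valid.any():
        return Descriptors(True, 0.0, energy)
    best_lag = lags[valid][np.argmax(ac[valid])]
    return Descriptors(True, 60.0 * fps / best_lag, energy)


class Improviser:
    """Hypothetical clip selector with randomly triggered transitions."""

    def __init__(self, library, transition_prob=0.2, top_k=3, seed=None):
        self.library = list(library)
        self.p = transition_prob
        self.top_k = top_k
        self.rng = np.random.default_rng(seed)
        self.current = None

    def _score(self, clip, d):
        # Lower is better: relative tempo mismatch plus energy mismatch.
        tempo_err = abs(clip.tempo_bpm - d.tempo_bpm) / max(d.tempo_bpm, 1.0)
        return tempo_err + abs(clip.energy - d.energy)

    def step(self, d):
        if not d.is_music:
            self.current = None
            return "idle"
        ranked = sorted(self.library, key=lambda c: self._score(c, d))
        candidates = ranked[: self.top_k]
        if self.current is None or self.current not in candidates:
            # Current clip no longer fits the music: jump to the best match.
            self.current = ranked[0]
        elif self.rng.random() < self.p:
            # Random transition to another clip that also fits the music.
            others = [c for c in candidates if c is not self.current]
            if others:
                self.current = others[self.rng.integers(len(others))]
        return self.current.name
```

Because `step` re-ranks the library on every slice, a change in tempo or energy pushes the dancer toward a better-matching clip, while `transition_prob` controls how often it switches between clips that both fit. With a nonzero value and no fixed seed, two runs on the same music follow different clip sequences, loosely mirroring the variety the abstract attributes to randomized transitions.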

Project information

- Category: Real-time, Music Reactive, Dance Generation, Deep Learning

- Publication page: ACM Digital Library

- Paper: PDF